Review: TensorFlow shines a light on deep learning

Google's open source framework for machine learning and neural networks is fast and flexible, rich in models, and easy to run on CPUs or GPUs

-

TensorFlow r0.10

What makes Google Google? Arguably it is machine intelligence, along with a vast sea of data to apply it to. While you may never have as much data to process as Google does, you can use the very same machine learning and neural network library as Google. That library, TensorFlow, was developed by the Google Brain team over the past several years and released to open source in November 2015.

TensorFlow does computation using data flow graphs. Google uses TensorFlow internally for many of its products, both in its datacenters and on mobile devices. For example, the Translate, Maps, and Google apps all use TensorFlow-based neural networks running on our smartphones. And TensorFlow underpins the applied machine learning APIs for Google Cloud Natural Language, Speech, Translate, and Vision.

Data flow graphs are directed acyclic graphs that describe a computation for TensorFlow. Nodes in the graph represent mathematical operations, while the graph edges represent the multidimensional data arrays (tensors) that flow between them. This flexible architecture lets you deploy computation to one or more CPUs or GPUs in a desktop, server, or mobile device without rewriting code. In addition to the library, TensorFlow includes an interactive program for displaying data flow graphs, TensorBoard.

The principal language for using TensorFlow is Python, although there is limited support for C++. The tutorials supplied with TensorFlow include applications for handwritten digit classification, image recognition, word embeddings, recurrent neural networks, sequence-to-sequence models for machine translation, natural language processing, and PDE (partial differential equation)-based simulations.

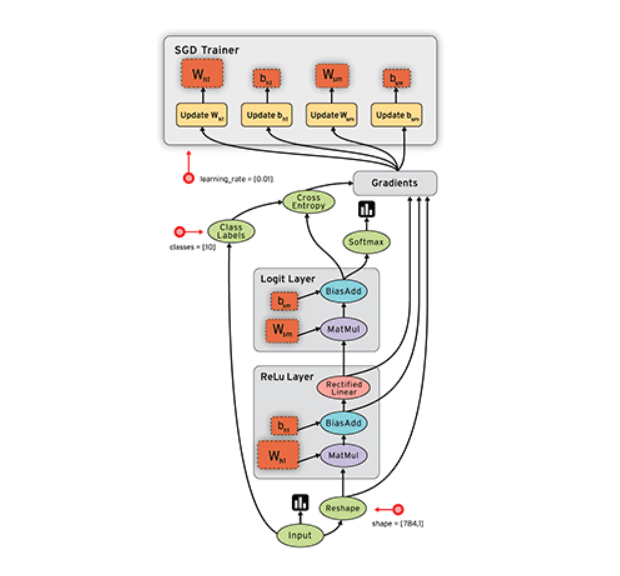

This is Google’s animated illustration of how tensors flow from node to node through the edges of a data flow graph.

TensorFlow currently runs on Ubuntu Linux, MacOS, Android, iOS, and Raspberry Pi, using Python 2.7, 3.4, or 3.5. Nvidia CUDA GPUs are supported on Linux and MacOS but not required. Google supplies Docker images for TensorFlow both with and without GPU support.

Google offers TensorFlow as a service as part of the Cloud Machine Learning Platform, along with Cloud Dataflow. The Cloud Machine Learning Platform is currently in limited preview and considered to be in alpha test. TensorFlow is also available for use in Cloud Datalab, a beta cloud service and Docker image that is based on Jupyter (formerly IPython) notebooks.

TensorFlow features

Google touts six key advantages for TensorFlow: deep flexibility, true portability, the ability to connect research and production, autodifferentiation of variables, language options, and the ability to maximize performance by prioritizing GPUs over CPUs. Let’s try to understand what these mean and why they are important.

Deep flexibility. The major point in this area is that a data flow graph isn’t limited to representing neural networks. While there is a lot of support for neural networks in TensorFlow, you are free to use libraries on top of TensorFlow or write your own, in Python or C++. Google provides a good example in the form of a partial differential equation (PDE) solver. This flexibility comes from the carefully layered architecture of the library.

True portability. Because TensorFlow supports CPUs or GPUs and desktop, server, container, and mobile computing platforms, you have a lot of options about where you run it. There are limits, however. Some of the training tasks you might want to perform with TensorFlow require significant hardware performance if you want them to converge in a reasonable amount of time, such as a multi-CPU machine with CUDA GPUs or a Google Tensor Processing Unit (TPU), which is a custom ASIC. (No, mere mortals can’t use TPUs -- yet. But Google is exploring what would make sense.)

For example, a relatively simple convolutional model for classifying handwritten digits from the MNIST data set takes half an hour to train on a single 2.6GHz Intel Core i7. If you train the same model with one or more recent Nvidia GPUs and the latest CUDA SDKs and deep neural network libraries, it will go much faster -- how much faster depends on the number and level of the GPUs and the efficiency of the libraries. If you train the same model on a TPU, it will take a few minutes. By the same token, it is reasonable to run predictions from a TensorFlow model on an Android or iOS device or a Raspberry Pi, but not to train the model on a mobile device.

Connect research and production. This point flows from portability, but it’s an improvement on previous practice. Not long ago, researchers designed machine learning models in programs intended for individual use (Matlab and Mathematica come immediately to mind, but there are many), then the models had to be rewritten, trained, and deployed using scalable technologies (for example, Python and C++ programs). By contrast, TensorFlow supports researchers developing algorithms, as well as product teams training models and deploying them for customers at scale, all with the same code.

Autodifferentiation. Many training algorithms rely on gradient descent in one form or another, often stochastic gradient descent (SGD). A gradient is a vector of partial derivatives, so before you can implement a gradient-based algorithm, you need a way to differentiate one variable with respect to all the other variables of interest. TensorFlow does that for you, letting you move on to more interesting problems. The derivative computation extends your graph, and you can see that when you view your graph in TensorBoard. This capability is not unique to TensorFlow, but it’s very nice to have.

Language options. TensorFlow is currently at the Model T stage of its language options: You can work in any language you want, as long as it’s Python. OK, that’s an exaggeration. There’s a documented limited subset of the API that can be used from C++ and an undocumented Go language directory in the repository that appears to implement a Go API on top of the C++ API.

Maximize performance. Google says, “TensorFlow allows you to make the most of your available hardware. Freely assign compute elements of your TensorFlow graph to different devices, and let TensorFlow handle the copies.” I haven’t done this yet myself; it’s covered in the Distributed TensorFlow and Using GPUs tutorials. Unless you have your own datacenter, you might want to consider deploying TensorFlow servers and clusters in one of the public clouds.

Installing TensorFlow

I installed TensorFlow and all its dependencies on a MacBook Pro running OS X El Capitan, using the Python 2.7 pip and easy_install utilities, as well as the Homebrew installation manager. I also checked out a few TensorFlow source code repositories from GitHub. Though my MacBook has a supported Nvidia GPU, I ran into trouble trying to get the GPU version of TensorFlow to work and wound up installing the slower CPU version. Because of conflicts in dependencies, it took me about seven tries; I eventually used the --ignore-installed flag to get pip to complete the process. (My woes related to an incompatibility between the Xcode 8.0 beta I was running and the CUDA compiler. Your sailing will likely be smoother.)

I installed Jupyter Notebooks as well. Jupyter is not a dependency of TensorFlow but is used by several of the TensorFlow samples. I ran into errors doing that with pip, but was able to succeed using easy_install.

I suspect that I’ll be able to upgrade to the GPU version in the future once I (and the three vendors) resolve version conflicts among Xcode, the CUDA SDK and CUDA neural network libraries, and TensorFlow.

There are four other methods to install TensorFlow: Virtualenv, Anaconda, Docker, and from source code. Virtualenv gives you an isolated Python environment. Once you are at an active Virtualenv command line, you can install TensorFlow with pip. That extra step might be worth the effort if you have version conflicts between TensorFlow dependencies and dependencies of other Python programs you have installed.

Anaconda is a large Python distribution with lots of numerical and scientific packages and its own environment system. Once you have Anaconda installed, you can either install TensorFlow with pip or Conda. Again, the extra step might be worth the effort -- and if you do it, you should install the latest Python 3 versions of Anaconda and TensorFlow.

Installing TensorFlow in Docker is simple and quick, and you get an isolated, virtualized environment. You also get a Jupyter Notebook server that you can access from a browser, along with a few preconfigured notebooks. The disadvantage of using Docker on your own computer is that it will only get part of your CPU and RAM, which means that iterating a neural network model or something else complex can take a long time. On the other hand, you can run a Docker image in the cloud and get as much in the way of computing resources as you are willing to rent.

Building TensorFlow from sources creates a current pip wheel; you then install that instead of pulling a prebuilt wheel downloaded from Google Cloud Storage. Using the most current source code from the repository may fix some bugs for you; it may also introduce new bugs. Caveat coder.

Running TensorFlow

Assuming that you are using Python from the command line, a very basic interactive TensorFlow session looks like this:

$ python...>>> import tensorflow as tf>>> hello = tf.constant('Hello, TensorFlow!')>>> sess = tf.Session()>>> print(sess.run(hello))Hello, TensorFlow!>>> a = tf.constant(10)>>> b = tf.constant(32)>>> print(sess.run(a + b))42>>> exit()

To run a command-line sample, you might do the following:

$ python -m tensorflow.models.image.mnist.convolutional

The -m switch in the line above means that Python should load and run its argument as a library module script. You could also navigate to the correct directory and omit the switch. By the way, this script is the one that took about a half-hour to complete on my MacBook Pro (CPU-only).

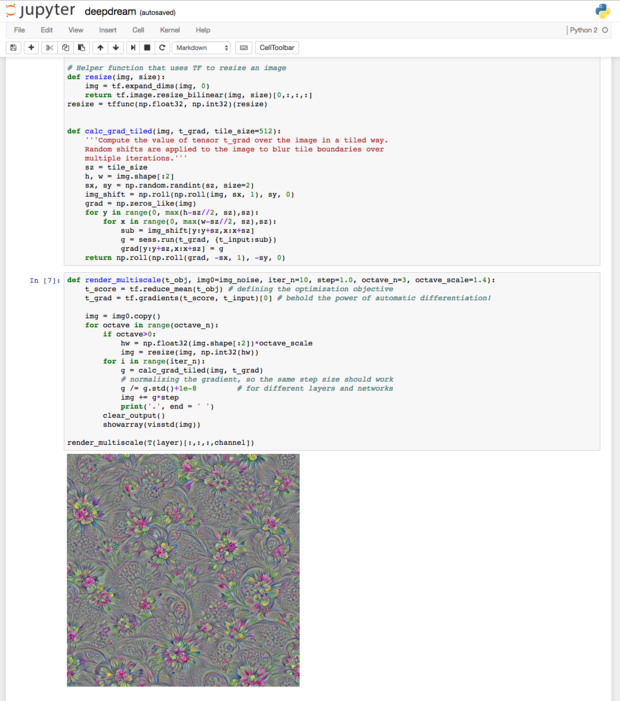

If you prefer to run a Jupyter notebook, navigate to the directory where your notebook resides, and from the command line type jupyter notebook. You should see a directory come up in your browser, from which you can pick an existing notebook, such as the DeepDream notebook in the figure below, or you can create a new notebook for your own work.

Jupyter Notebook displaying a TensorFlow DeepDream example. Functions with tf prefixes belong to TensorFlow, as do functions with sess prefixes. Functions with np prefixes belong to NumPy.

You can also get a Jupyter notebook by running a TensorFlow Docker image. The command line is as follows:

docker run -it -p 8888:8888 gcr.io/tensorflow/tensorflow

The -p switch exports the first port listed from Docker to the second port listed on the host. Then you can open the notebooks that reside inside the Docker image from a browser tab on your host by browsing to localhost:8888 if the image is running locally, or port 8888 of the correct IP or DNS address if it is running remotely.

In a Jupyter notebook or other interactive session, you may use the tf.InteractiveSession() constructor instead of tf.Session(). The subsequent code is a little less verbose.

This Jupyter notebook is one of the three supplied in the TensorFlow Docker image. This code is doing a basic gradient descent optimization of a neural network learning from a small dataset.

Besides the library and samples, TensorFlow includes a suite of visualization tools called TensorBoard, as shown in the figure below. You can use TensorBoard to visualize your TensorFlow graph, plot quantitative metrics about the execution of your graph, and show additional data like images that pass through it. I've only recently begun with TensorBoard, but it looks to be a nice tool once your TensorFlow sessions are configured to log the necessary information. You can try it out online even without having TensorFlow installed.

TensorBoard displaying the graph of a TensorFlow calculation. We have zoomed in on several sections to examine the details of the graph.

TensorFlow demos, tutorials, and models

There’s so much to learn about TensorFlow that, for the purposes of this article, all I can do is briefly discuss the materials that Google presents and outline a path through them. By the way, Google is actively working on adding more tutorial material and structuring it better.

You can get a feel for neural networks on the web, without installing anything, at the TensorFlow Playground. This example doesn’t use TensorFlow, however. Consider it to be background to build your intuition about neural networks.

| InfoWorld Scorecard |

Models and algorithms (25%)

|

Ease of development (25%)

|

Documentation (20%)

|

Performance (20%)

|

Ease of deployment (10%)

|

Overall Score (100%)

|

|---|---|---|---|---|---|---|

| TensorFlow r0.10 | 9 | 8 | 9 | 10 | 8 |