Tech giants such as Amazon, Facebook, and Google have redefined IT for web-scale applications, leveraging standard servers and shared-nothing architectures to ensure maximum operational efficiency, flexibility, and reliability. As new application workloads – cloud, mobile, IoT, machine learning, and real-time analytics – drive the need for faster and more scalable storage, enterprises are seeking to optimize their infrastructures in the same way as these tech giants.

Excelero’s NVMesh, a unified, scale-out, software-defined block storage solution that runs on industry-standard servers, was designed in response to these needs. NVMesh leverages high-performance NVMe flash, a highly distributed architecture, intelligent clients that minimize cluster communications, and a proprietary storage access protocol that bypasses the CPU on storage targets.

The result is a high-performance block storage system with extremely low latency overhead. In a nutshell, server access to remote storage devices is a mere 5 microseconds (µs) slower than access to local devices, and that overhead is mostly in the network. Further, NVMesh can be flexibly deployed as converged (combining compute and storage) or “disaggregated” (storage server or JBOD) infrastructure, and scales simply and granularly by adding drives or nodes to the cluster.

As storage has moved from the mechanical world of hard drives to the silicon world of SSDs, we have experienced rapid improvements in performance, capacity, and reliability. Today, the leading flash storage interface is NVMe, which is powered by a much faster protocol that eliminates the bottlenecks of ATA and SCSI.

NVMe essentially gives us a much better way to access the performance of flash, both today’s SSDs and future innovations. Because NVMe devices attach directly to the PCIe bus, they open up a whole new range of opportunities. NVMe can provide the performance, latency, and power efficiencies required by today’s massive web and supercomputing applications as well as powerful data analytics environments such as industrial IoT.

In this article we’ll take a deep dive into the technology that Excelero engineers developed to take advantage of NVMe and the paradigm shift to server-based, software-defined storage.

Introducing NVMesh

Excelero designed NVMesh to meet the block storage requirements of modern scale-out applications, leveraging state-of-the-art flash on standard servers. The key requirements for such environments are:

- Performance that is akin to locally attached high-performance flash

- Optimal cost/performance ratio

- Support for highly variable application loads and ubiquitous access

- Support for converged environments while avoiding noisy neighbor problems

- Handling of all failure scenarios at the software level including cross-rack failover and online upgrades

- Support for environments hosting multiple applications with disparate performance, consistency, and availability requirements

NVMesh enables customers to design server-based SAN infrastructures for the most demanding enterprise and cloud-scale applications, leveraging standard servers and multiple tiers of flash. The primary benefit of NVMesh is that it enables converged infrastructure by logically disaggregating storage from compute.

NVMesh features a distributed block layer that allows unmodified applications to use pooled NVMe storage devices across a network at local speeds and latencies. Distributed NVMe storage resources are pooled, and arbitrary virtual volumes can be created dynamically from the pool and striped, mirrored, or both. Volumes can be spread over multiple hosts and used by any host running the NVMesh block client. In short, applications can enjoy the latency, throughput, and IOPS of a local NVMe device while at the same time getting the benefits of pooled, redundant, centrally managed storage.
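To make the "striped, mirrored, or both" layout concrete, here is a minimal Python sketch of how a logical block address could be mapped onto mirrored drive pairs. The function name `locate_block`, the stripe size, and the drive labels are assumptions made purely for illustration; the article does not describe NVMesh's actual on-disk layout.

```python
from typing import List, Tuple

# Hypothetical volume: two mirrored pairs of NVMe drives, striped across the pairs.
MIRROR_PAIRS: List[Tuple[str, str]] = [
    ("hostA/nvme0", "hostB/nvme0"),
    ("hostC/nvme0", "hostD/nvme0"),
]
STRIPE_BLOCKS = 8  # assumed stripe unit, in blocks


def locate_block(
    lba: int,
    stripe_blocks: int = STRIPE_BLOCKS,
    mirror_pairs: List[Tuple[str, str]] = MIRROR_PAIRS,
) -> Tuple[Tuple[str, str], int]:
    """Map a logical block address to (mirror pair, block offset on each drive)."""
    stripe_index = lba // stripe_blocks             # which stripe unit, counted over the whole volume
    pair_index = stripe_index % len(mirror_pairs)   # stripe units rotate round-robin across pairs
    row = stripe_index // len(mirror_pairs)         # how many stripe units precede it on that pair
    offset = row * stripe_blocks + lba % stripe_blocks
    return mirror_pairs[pair_index], offset


# A write goes to both drives of the returned pair; a read can use either one.
pair, offset = locate_block(19)
```

In this sketch, striping spreads load across pairs while mirroring keeps two copies of every block, so a client can compute the location of any block on its own, without consulting a central metadata service.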

A key component of Excelero’s NVMesh is the patented Remote Direct Drive Access (RDDA) functionality, which bypasses the CPU and, as a result, avoids the noisy neighbor effect on application performance. The shift of data services from centralized CPU to complete client-side distribution enables linear scalability, provides deterministic performance for applications, and allows customers to maximize the utilization of their flash drives.

NVMesh is deployed as a virtual, distributed, non-volatile array. It supports both converged and disaggregated architectures, giving customers freedom in their architectural design.

NVMesh components

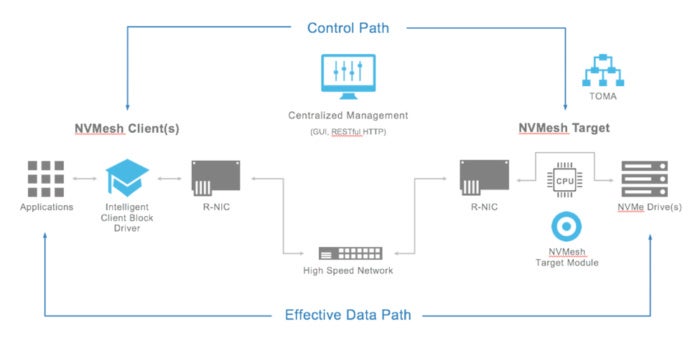

NVMesh consists of four main software components: the NVMesh Target Module, the Topology Manager (TOMA), the Intelligent Client Block Driver, and the centralized management system.

The NVMesh Target Module identifies storage hardware, such as NVMe drives and compatible NICs, and sets up RDDA pathways into the NVMe drives on behalf of the storage clients. The module runs on target nodes, which are physical nodes containing non-volatile storage elements to be shared. Once the Target Module sets up the connections from clients to the NVMe drives, it “steps back” and hence is not in the data path. The Target Module is also involved in error detection and handling.

The Topology Manager, which comes bundled with the Target Module and runs on the same hardware elements, executes in user space and provides volume control plane functionality. TOMA tracks the activity of drives and storage target modules to maintain seamless volume activity upon element failure. Upon recognizing a failure, the Topology Manager performs the actions required to ensure data consistency. It also manages and engages in recovery operations upon element resumption or replacement.

The Intelligent Client Block Driver implements block device functionality for storage consumers. This software runs on client nodes. A host or node that has one or more NVMe devices to share and also participates as a client is called a converged node. Converged nodes run both the block storage client and the storage target module. If desired, the storage management module can also run on a client, target, or converged node.

The centralized management system provides configuration and monitoring functionality. In addition to a web GUI, it provides a RESTful API management interface for integration with data center management systems and orchestration mechanisms.

Separate control and data paths

Being a true software-defined storage solution, NVMesh separates the control path and data path. Most of NVMesh’s control path functionality is executed by the storage management modules, which avoid broadcasting management communications to ensure scalability. The control path functionality includes:

- Maintaining the non-volatile storage device inventory

- Defining volumes, via GUI or programmatically (RESTful API)

- Attaching and detaching volumes to block storage clients, presenting them as local block devices

- Reporting and statistics

The data path serves the actual storage I/O operations, which are sent from the block storage client to the storage devices using several mechanisms. NVMe devices are accessed at local speeds using Excelero’s patented RDDA protocol. Other target block devices, such as SATA SSDs, are accessed using standard RDMA queue pairs to interact with the storage target module that will subsequently perform the I/O operation. NVMf devices are accessed directly using the NVMf protocol.
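The three access mechanisms described above amount to a dispatch on device type. The following Python sketch restates that rule; the `DeviceKind` enum, the `select_data_path` function, and the returned labels are hypothetical names chosen for illustration and are not part of any NVMesh API.

```python
from enum import Enum


class DeviceKind(Enum):
    NVME = "nvme"          # NVMe drive in a target node
    NVMF = "nvmf"          # device exposed over NVMf
    OTHER_BLOCK = "other"  # any other target block device, such as a SATA SSD


def select_data_path(kind: DeviceKind) -> str:
    """Return a label for the mechanism the client would use for this device type."""
    if kind is DeviceKind.NVME:
        return "rdda"                    # direct drive access, target CPU not in the data path
    if kind is DeviceKind.NVMF:
        return "nvmf"                    # standard NVMf protocol
    return "rdma-to-target-module"       # RDMA queue pairs; the target module performs the I/O


paths = {kind.name: select_data_path(kind) for kind in DeviceKind}
```

Only the last case involves target-side software on every I/O, which is why the choice of mechanism affects target CPU load.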

RDDA vs. NVMf

Besides Excelero’s RDDA protocol, NVMesh supports the open protocol NVMf as a wire transport. NVMf may be preferable from a standards perspective, but it is less desirable in terms of performance overhead, because it requires target-side software (and therefore target CPU) to be invoked. RDDA typically provides better access latency without requiring target-side CPU usage.

Employing RDDA provides an immediate latency benefit of 10 microseconds (or more) compared to kernel-based NVMe-over-Fabrics (more on performance below). RDDA significantly reduces load on the target side by avoiding running the kernel software stack including potentially computationally expensive interrupt handling.

Lastly, NVMf is a low latency protocol for NVMe over a network fabric—it’s not a storage solution. The NVMf client and target allow you to access drives remotely, but do nothing for adding redundancy, logical volumes, failover, or centralized monitoring and management.

A key benefit of RDDA is that it bypasses the CPU on targets. As a result, planning resource allocation for applications is simpler and more consistent. A second benefit is the ability to spread data across failure zones for high availability, which calls for a distributed data layout. Because storage services are implemented on the client side, data can be reached in a single hop even when it is spread across disparate physical entities.

Scale-out architecture

Excelero NVMesh was designed to scale naturally. Targeting larger data centers with upwards of 100,000 converged nodes, NVMesh was designed to avoid any centralized capability in the data path that could become a bottleneck. This means no centralized metadata or lock management. Client, target, and management functionalities are built as scale-out technologies as well. Because it is critical to ensure that the networking pattern supports web-scale deployments, clients are completely independent. Knowledge sharing among clients is rare and occurs indirectly and anonymously via targets. Client independence ensures easy client scaling.

Storage targets communicate only with other targets that are protecting the same data. Volumes are spread out in a way that minimizes the amount of such communication. In contrast, other products spread the volumes in a way that has every node mirroring something from every other node. Typically, a target will have to communicate with only a handful of other nodes, even in a large-scale environment, due to this volume allocation strategy.
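A quick count shows why this matters at scale. The Python sketch below compares the number of target-to-target links when every node mirrors something from every other node against a layout in which targets only talk to a small protection group. The fixed group size and both function names are assumptions for illustration; the article does not specify how NVMesh actually groups targets.

```python
def links_all_to_all(nodes: int) -> int:
    """Every target protects data with every other target."""
    return nodes * (nodes - 1) // 2


def links_grouped(nodes: int, group_size: int) -> int:
    """Targets only talk to peers in their own (assumed) protection group."""
    full_groups, remainder = divmod(nodes, group_size)
    per_group = group_size * (group_size - 1) // 2
    return full_groups * per_group + remainder * (remainder - 1) // 2


nodes = 1000
all_to_all = links_all_to_all(nodes)          # 499,500 links
grouped = links_grouped(nodes, group_size=4)  # 1,500 links
```

With 1,000 targets, the all-to-all layout needs 499,500 links, while groups of four need 1,500. The first grows quadratically with the number of nodes and the second linearly, which is the property the volume allocation strategy is after.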

Further, cross-target communication is limited to keep-alives, quorum communication, and rebuilds. Data protection is done by the clients themselves, with the number of elements participating in data protection of any specific stored data element limited to reduce system chatter and enable wide scaling.

Centralized management consists of a stateless web serving framework (Node.js) and a transactional, scale-out data store (MongoDB) on the back end. This ensures that the management framework has the required scale-out and failover characteristics to support the largest data centers.

The client-target communication pattern rests on the intelligence of the Intelligent Client Block Driver. Clients know to approach the correct group of targets, thereby reducing both the number of network hops and the number of communication lines. The result is that the number of connections is a small multiple of domain size.

Proof in the performance

The performance of storage systems is influenced by many elements, including the storage hardware components, the servers, the network, and the efficiency of the software. NVMesh was designed to let applications enjoy the full performance, capacity, and processing power of the underlying servers and storage. The software was designed to add virtually no latency and not impact the target CPU. As NVMesh is a pure software-defined storage solution, performance varies with choices made by the customer. To give an idea of what NVMesh is capable of, we provide a brief summary of tests performed during a live demo for an audience of tech analysts:

The tests were performed on about $13,000 worth of hardware, including:

- One Supermicro 2028U-TN24RT+ 2U dual-socket server with up to 24 NVMe 2.5-inch drive slots

- Two 2x100Gbps Mellanox ConnectX-5 Ethernet/RDMA NICs

- 24 Intel 2.5-inch 400GB NVMe SSDs

- One Dell Z9100-ON switch supporting 32x100Gbps QSFP28 ports

The system generated 2.5M IOPS for 4KB random writes and 4.5M IOPS for 4KB random reads. Random write average response time was less than 350 microseconds and the random read average response time was 227 microseconds. During the 4.5M IOPS random read benchmark, the storage target CPU was at 0.3 percent utilization (mostly running Linux services to display process status).
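To put these figures in perspective, the short Python calculation below converts the reported IOPS into throughput and uses Little's law (concurrency = throughput × average latency) to estimate how many reads were in flight. It assumes that "4KB" means 4,096 bytes; the function names are illustrative, and the inputs are the numbers quoted above.

```python
BLOCK_BYTES = 4096  # assumption: "4KB" means 4,096 bytes


def throughput_gb_per_s(iops: int, block_bytes: int = BLOCK_BYTES) -> float:
    """Data rate in decimal gigabytes per second."""
    return iops * block_bytes / 1e9


def outstanding_ios(iops: int, avg_latency_us: float) -> float:
    """Little's law: average number of I/Os in flight."""
    return iops * avg_latency_us / 1e6


read_gb_s = throughput_gb_per_s(4_500_000)        # random reads
write_gb_s = throughput_gb_per_s(2_500_000)       # random writes
reads_in_flight = outstanding_ios(4_500_000, 227)
```

Under that assumption, the read result corresponds to about 18.4 GB/s and the write result to about 10.2 GB/s, with roughly 1,020 reads outstanding on average during the read benchmark.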

A new generation of storage

Excelero NVMesh is a software-defined storage solution designed to meet the requirements of web-scale applications. It leverages state-of-the-art NVMe flash and industry standard servers to deliver consistent, low-latency, high-performance block storage that also meets the cost, scalability, flexibility, and reliability requirements of the modern data center.

NVMesh facilitates performant and cost-effective converged deployments by avoiding noisy neighbor disruptions and effectively disaggregating compute and storage logically without having to do so physically. The key is a new architecture that combines direct access to remote storage devices by clients, a client-side storage service implementation, and a scalable communication pattern.

Scalability is achieved by careful design choices for the communication patterns and scale-out components for the management, control, and data planes. And by providing a block storage API and volume definition flexibility, NVMesh allows administrators to decide how to balance performance, data layout, and availability in the way that meets their requirements.

New Tech Forum provides a venue to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to newtechforum@infoworld.com.